1. Avoid database Identity generator

The most common issue you’ll run into is that database identity breaks the notion of a unit of work:

- When we use an identity, the value only exists once the row has been inserted, so we have to hit the database as soon as the entity is saved instead of deferring the insert to a later time.

- It also renders batching useless.

- And, just to put some additional icing on the cake, identity is broken on SQL Server 2005 and SQL Server 2008.

I strongly recommend using some other generator strategy, such as GuidComb (similar to SQL Server’s NEWSEQUENTIALID()) or HiLo (which also generates human-readable values).

2. GuidComb Generator

Although GuidComb is the number one option I suggest, this post explains the HiLo generator.

3. HiLo Generator

The hi/lo algorithm splits the sequence domain into “hi” groups. A “hi” value is assigned synchronously. Every “hi” group is given a maximum number of “lo” entries that can be assigned offline without worrying about concurrent duplicate entries.

- The “hi” token is assigned by the database, and two concurrent calls are guaranteed to see unique consecutive values.

- Once a “hi” token is retrieved, we only need the “incrementSize” (the number of “lo” entries).

- The identifier range is given by the following formula:

  [(hi - 1) * incrementSize + 1, hi * incrementSize + 1)

  and the “lo” value will be in the range:

  [0, incrementSize)

  being applied from the start value of:

  (hi - 1) * incrementSize + 1

- When all “lo” values are used, a new “hi” value is fetched and the cycle continues.
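To make the range formula concrete, here is a minimal in-memory sketch of the allocation cycle described above. The class name `HiLoSketch` and the `fetchNextHi` delegate are hypothetical stand-ins for the database round trip; this illustrates the formula and is not the code NHibernate or Hibernate actually runs.

```csharp
using System;

// Hypothetical illustration of the hi/lo formula; not an NHibernate API.
// Not thread-safe: a real generator would synchronize access to its state.
public sealed class HiLoSketch
{
    private readonly Func<long> _fetchNextHi; // stands in for the database call
    private readonly int _incrementSize;      // number of "lo" entries per "hi"
    private long _hi;
    private int _lo;

    public HiLoSketch(Func<long> fetchNextHi, int incrementSize)
    {
        if (incrementSize < 1)
            throw new ArgumentOutOfRangeException(nameof(incrementSize));
        _fetchNextHi = fetchNextHi ?? throw new ArgumentNullException(nameof(fetchNextHi));
        _incrementSize = incrementSize;
        _lo = incrementSize; // range starts exhausted, forcing a fetch on first use
    }

    public long Next()
    {
        if (_lo >= _incrementSize)
        {
            // All "lo" values are used: fetch a new "hi" and restart the cycle.
            _hi = _fetchNextHi();
            _lo = 0;
        }

        // Start value (hi - 1) * incrementSize + 1, offset by "lo" in [0, incrementSize).
        return (_hi - 1) * _incrementSize + 1 + _lo++;
    }
}
```

With `incrementSize = 3` and a delegate that returns 1, 2, 3 and so on, successive calls to `Next()` yield 1, 2, 3 for the first “hi”, then 4, 5, 6 for the second, with one fetch per three identifiers. Two instances sharing the same source of “hi” values never overlap, which is what lets each process hand out ids without coordinating on every insert.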


While the hi/lo optimizer is fine for optimizing identifier generation, it doesn’t play well with other systems that insert rows into our database without knowing anything about our identifier strategy.

Hibernate offers the pooled-lo optimizer, which combines a hi/lo generator strategy with an interoperable sequence allocation mechanism. This optimizer is both efficient and interoperable with other systems, which makes it a better candidate than the legacy hi/lo identifier strategy.
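The following sketch shows why a pooled-lo style allocation can coexist with other writers. It assumes, as I understand Hibernate’s pooled-lo optimizer, that the database sequence advances by `incrementSize` and that each value it returns is the lowest identifier of a block. `PooledLoSketch` and the `nextSequenceValue` delegate are hypothetical names used only for illustration.

```csharp
using System;

// Hypothetical illustration of a pooled-lo style allocator; not a Hibernate or NHibernate API.
// Assumes the underlying sequence is incremented by incrementSize on every call.
public sealed class PooledLoSketch
{
    private readonly Func<long> _nextSequenceValue; // stands in for "select next value" on the sequence
    private readonly int _incrementSize;
    private long _low;  // lowest id of the current block, as returned by the sequence
    private int _used;  // how many ids of the current block were handed out

    public PooledLoSketch(Func<long> nextSequenceValue, int incrementSize)
    {
        if (incrementSize < 1)
            throw new ArgumentOutOfRangeException(nameof(incrementSize));
        _nextSequenceValue = nextSequenceValue ?? throw new ArgumentNullException(nameof(nextSequenceValue));
        _incrementSize = incrementSize;
        _used = incrementSize; // block starts exhausted, forcing a fetch on first use
    }

    public long Next()
    {
        if (_used >= _incrementSize)
        {
            // The sequence value itself is a usable id and marks the start of our block.
            _low = _nextSequenceValue();
            _used = 0;
        }

        return _low + _used++;
    }
}
```

If the sequence yields 1, 4, 7 and so on, the generator hands out 1, 2, 3 from its first block. An external system that simply takes the next sequence value gets 4 and can insert with it directly, and the generator’s next block then starts at 7, so neither side needs to know the other’s strategy.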

Max Low

First of all, you can configure the max low value for the algorithm using mapping by code, like this:

```csharp
x.Generator(Generators.HighLow, g => g.Params(new { max_lo = 100 }));
```

The default max low value is 32767. When choosing a lower or a higher value, you should take into consideration:

- The next high value is updated whenever a new session factory is created, or the current low reaches the max low value;

- If you have a large number of inserts, a higher max low may pay off, because NHibernate only has to go to the database when the current range is exhausted, and larger ranges are exhausted less often;

- If the session factory is frequently restarted, a lower value will prevent gaps.

There is no magic number; you will need to find the one that best suits your needs.
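As a rough way to reason about this trade-off, the arithmetic can be sketched as below. It assumes that each high value covers a block of `max_lo + 1` identifiers and that the unused remainder of a block is lost on restart, which is my reading of the generator’s behaviour rather than something stated above. `MaxLoTradeOff` is a hypothetical helper, not part of NHibernate.

```csharp
using System;

// Hypothetical helper for estimating the cost of a given max_lo; not an NHibernate API.
// Assumption: one "hi" value covers a block of (maxLo + 1) identifiers.
public static class MaxLoTradeOff
{
    public static long BlockSize(int maxLo)
    {
        if (maxLo < 0)
            throw new ArgumentOutOfRangeException(nameof(maxLo));
        return (long)maxLo + 1;
    }

    // Number of trips to the HiLo table needed to cover the given number of inserts
    // within a single session factory lifetime (ceiling division).
    public static long RoundTrips(long inserts, int maxLo)
    {
        long block = BlockSize(maxLo);
        return (inserts + block - 1) / block;
    }

    // Largest gap a restart can leave behind: a block from which only one id was used.
    public static long WorstCaseGapPerRestart(int maxLo)
    {
        return BlockSize(maxLo) - 1;
    }
}
```

Under these assumptions, one million inserts need 9,901 trips to the HiLo table with `max_lo = 100` but only 31 with the default of 32767, while a restart can waste up to 100 ids in the first case and up to 32,767 in the second.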

One Value for All Entities

With the default configuration of HiLo, a single table, row and column will be used to store the next high value for all entities using HiLo. The mapping-by-code configuration is as follows:

```csharp
this.Id(x => x.SomeId, x =>
{
    x.Column("some_id");
    x.Generator(Generators.HighLow);
});
```

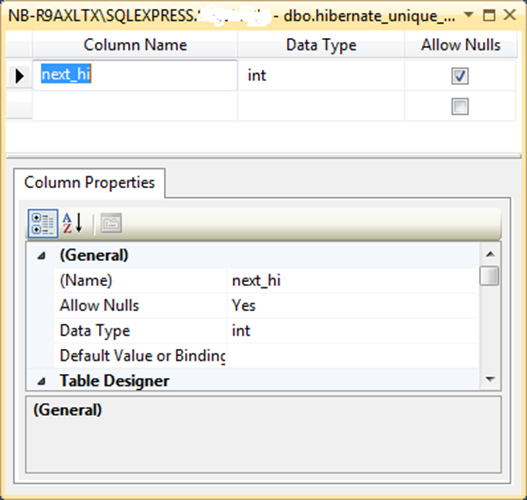

The default table is called HIBERNATE_UNIQUE_KEY, and its schema is very simple:

Whenever NHibernate wants to obtain and increment the current next high value, it will issue SQL like this (for SQL Server):

```sql
-- select current value
select next_hi
from hibernate_unique_key with (updlock, rowlock)

-- update current value
update hibernate_unique_key
set next_hi = @p0
where next_hi = @p1;
```

There are pros and cons to this default approach:

- Each record will have a different id, there will never be two entities with the same id;

- Because of the sharing between all entities, the ids will grow much faster;

- When used simultaneously by several applications, there will be some contention on the table, because it is being locked whenever the next high value is obtained and incremented;

- The HIBERNATE_UNIQUE_KEY table is managed automatically by NHibernate (created, dropped and populated).

One Row Per Entity

Another option to consider, which is supported by NHibernate’s HiLo generator, is to have each entity store its next high value in a different row. You achieve this by supplying a where parameter to the generator:

```csharp
this.Id(x => x.SomeId, x =>
{
    x.Column("some_id");
    x.Generator(Generators.HighLow, g => g.Params(new { where = "entity_type = 'some_entity'" }));
});
```

In it, you would specify a restriction on an additional column. The problem is, NHibernate knows nothing about this other column, so it won’t create it.

One way around this is to use an auxiliary database object (maybe a topic for another post). This is standard NHibernate functionality that allows registering SQL to be executed when the database schema is created, updated or dropped. Using mapping by code, it is applied like this:

```csharp
using System;
using System.Text;
using NHibernate.Cfg;
using NHibernate.Dialect;
using NHibernate.Id;
using NHibernate.Mapping;
using NHibernate.SqlTypes;

private static IAuxiliaryDatabaseObject OneHiLoRowPerEntityScript(Configuration cfg, String columnName, String columnValue)
{
    // Instantiate the configured dialect so that the generated DDL is not tied to one database.
    var dialect = Activator.CreateInstance(Type.GetType(cfg.GetProperty(NHibernate.Cfg.Environment.Dialect))) as Dialect;
    var script = new StringBuilder();

    // Add the discriminator column, then insert this entity's own row with an initial next_hi of 1.
    script.AppendFormat("ALTER TABLE {0} {1} {2} {3} NULL;\n{4}\nINSERT INTO {0} ({5}, {2}) VALUES (1, '{6}');\n{4}\n", TableHiLoGenerator.DefaultTableName, dialect.AddColumnString, columnName, dialect.GetTypeName(SqlTypeFactory.GetAnsiString(100)), (dialect.SupportsSqlBatches ? "GO" : String.Empty), TableHiLoGenerator.DefaultColumnName, columnValue);

    // The second argument is the drop script; none is registered here.
    return new SimpleAuxiliaryDatabaseObject(script.ToString(), null);
}

Configuration cfg = ...;
cfg.AddAuxiliaryDatabaseObject(OneHiLoRowPerEntityScript(cfg, "entity_type", "some_entity"));
```

Keep in mind that this needs to happen before the session factory is built. Basically, we are creating a SQL ALTER TABLE followed by an INSERT statement, which change the default HiLo table by adding another column that will serve as the discriminator. To make it work across databases, I used the registered Dialect class.
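To see what the auxiliary object ends up sending to the database, the format string can be rendered with fixed values in place of the dialect calls. The helper below is a hypothetical preview tool: the values passed in the usage note (`add`, `VARCHAR(100)`, `GO`, and the default table and column names `hibernate_unique_key` and `next_hi`) are what I would expect for a SQL Server dialect, but they are assumptions, so check them against your own dialect.

```csharp
using System;

// Hypothetical preview helper; it only reproduces the format string used above
// and does not call NHibernate. All arguments are supplied by the caller.
public static class HiLoScriptPreview
{
    public static string RenderRowPerEntity(
        string table,        // e.g. the default HiLo table name
        string addColumn,    // the dialect's "add column" keyword(s)
        string column,       // discriminator column to add
        string columnType,   // SQL type of the discriminator column
        string separator,    // batch separator, or empty if unsupported
        string hiColumn,     // column holding the next high value
        string value)        // discriminator value for this entity
    {
        return string.Format(
            "ALTER TABLE {0} {1} {2} {3} NULL;\n{4}\nINSERT INTO {0} ({5}, {2}) VALUES (1, '{6}');\n{4}\n",
            table, addColumn, column, columnType, separator, hiColumn, value);
    }
}
```

Calling `HiLoScriptPreview.RenderRowPerEntity("hibernate_unique_key", "add", "entity_type", "VARCHAR(100)", "GO", "next_hi", "some_entity")` produces an `ALTER TABLE hibernate_unique_key add entity_type VARCHAR(100) NULL;` statement, a `GO` line, an `INSERT INTO hibernate_unique_key (next_hi, entity_type) VALUES (1, 'some_entity');` statement, and a final `GO`. Note that the discriminator value is concatenated into the script as-is, so it should only ever come from trusted configuration.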

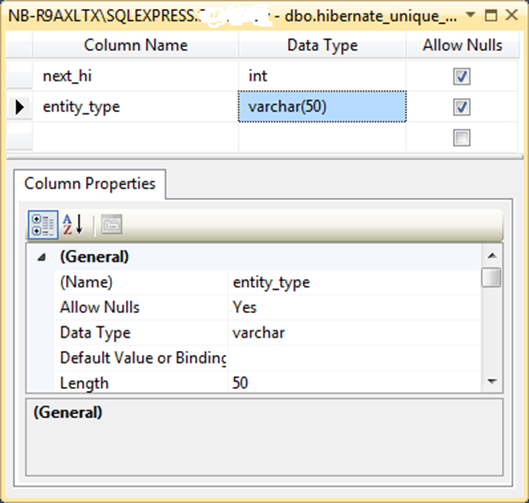

Its schema will then look like this:

When NHibernate needs the next high value, this is what it does:

```sql
-- select current value
select next_hi
from hibernate_unique_key with (updlock, rowlock)
where entity_type = 'some_entity'

-- update current value
update hibernate_unique_key
set next_hi = @p0
where next_hi = @p1
and entity_type = 'some_entity';
```

This approach only has advantages:

- The HiLo table is still managed by NHibernate;

- You have different id generators per entity (of course, you can still combine multiple entities under the same where clause), which will make them grow more slowly;

- No contention occurs, because each entity is using its own record on the HIBERNATE_UNIQUE_KEY table.

Another, better One Row Per Entity solution, implemented through FluentNHibernate, is described at http://anthonydewhirst.blogspot.com/2012/02/fluent-nhibernate-solution-to-enable.html.

One Column Per Entity

Yet another option is to have each entity use its own column for storing the high value. For that, we need to use the column parameter:

```csharp
this.Id(x => x.SomeId, x =>
{
    x.Column("some_id");
    x.Generator(Generators.HighLow, g => g.Params(new { column = "some_column_id" }));
});
```

Like in the previous option, NHibernate does not know and therefore does not create this new column automatically. For that, we resort to another auxiliary database object:

```csharp
using System;
using System.Text;
using NHibernate.Cfg;
using NHibernate.Dialect;
using NHibernate.Id;
using NHibernate.Mapping;
using NHibernate.SqlTypes;

private static IAuxiliaryDatabaseObject OneHiLoColumnPerEntityScript(Configuration cfg, String columnName)
{
    // Instantiate the configured dialect so that the generated DDL is not tied to one database.
    var dialect = Activator.CreateInstance(Type.GetType(cfg.GetProperty(NHibernate.Cfg.Environment.Dialect))) as Dialect;
    var script = new StringBuilder();

    // Add the per-entity high value column, then initialize it to 1 on the existing row.
    script.AppendFormat("ALTER TABLE {0} {1} {2} {3} NULL;\n{4}\nUPDATE {0} SET {2} = 1;\n{4}\n", TableHiLoGenerator.DefaultTableName, dialect.AddColumnString, columnName, dialect.GetTypeName(SqlTypeFactory.Int32), (dialect.SupportsSqlBatches ? "GO" : String.Empty));

    // The second argument is the drop script; none is registered here.
    return new SimpleAuxiliaryDatabaseObject(script.ToString(), null);
}

Configuration cfg = ...;
cfg.AddAuxiliaryDatabaseObject(OneHiLoColumnPerEntityScript(cfg, "some_column_id"));
```

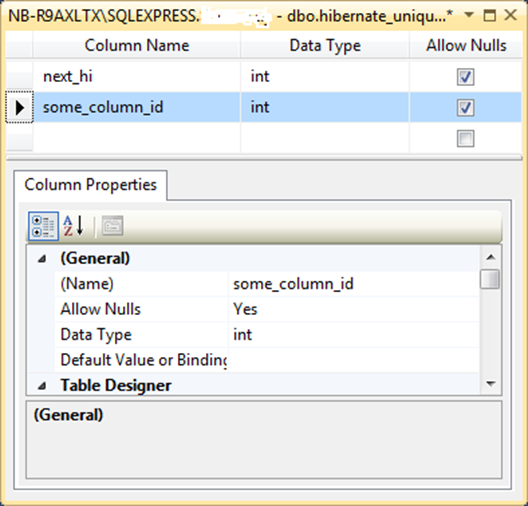

The schema, with an additional column, would look like this:

And NHibernate executes this SQL for getting/updating the next high value:

```sql
-- select current value
select some_column_id
from hibernate_unique_key with (updlock, rowlock)

-- update current value
update hibernate_unique_key
set some_column_id = @p0
where some_column_id = @p1;
```

The only advantage of this model is having separate ids per entity; contention on the HiLo table will still occur, because all entities share the same row.

One Table Per Entity

The final option to consider is having a separate table per entity (or group of entities). For that, we use the table parameter:

```csharp
this.Id(x => x.SomeId, x =>
{
    x.Column("some_id");
    x.Generator(Generators.HighLow, g => g.Params(new { table = "some_entity_unique_key" }));
});
```

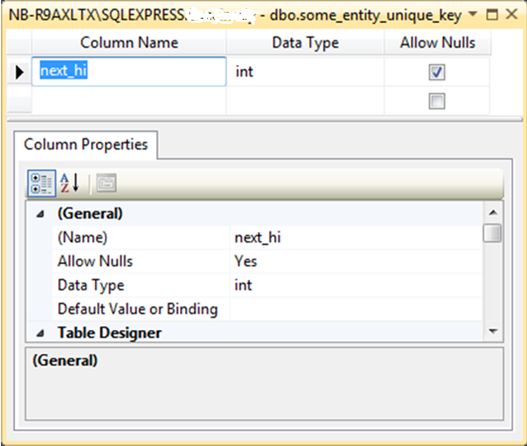

In this case, NHibernate generates the new HiLo table for us, together with the default HIBERNATE_UNIQUE_KEY, if any entity uses it, with exactly the same schema:

And the SQL is, of course, also identical, except for the table name:

```sql
-- select current value
select next_hi
from some_entity_unique_key with (updlock, rowlock)

-- update current value
update some_entity_unique_key
set next_hi = @p0
where next_hi = @p1;
```

Again, all pros and no cons:

- Table still fully managed by NHibernate;

- Different ids per entity or group of entities means they will grow slower;

- Contention will only occur if more than one entity uses the same HiLo table.

https://weblogs.asp.net/ricardoperes/making-better-use-of-the-nhibernate-hilo-generator